Live Data Acquisition is the process of extracting volatile information present in the registers, cache, and RAM of digital devices through their normal interfaces. Volatile information is dynamic and changes over time, so investigators should collect the data in real time.

Simple actions such as looking through the files on a running computer or booting up the computer can destroy or modify the available evidence, because the data is not write-protected. Contamination is also harder to control: tools and commands may change file access dates and times, use shared libraries or DLLs, trigger the execution of malicious software (malware), or, in the worst case, force a reboot that results in the loss of all volatile data. Investigators must therefore be very careful while performing live acquisition. Volatile information helps establish a logical timeline of the security incident and reveals network connections, command history, running processes, connected peripherals and devices, and the users logged on to the system.

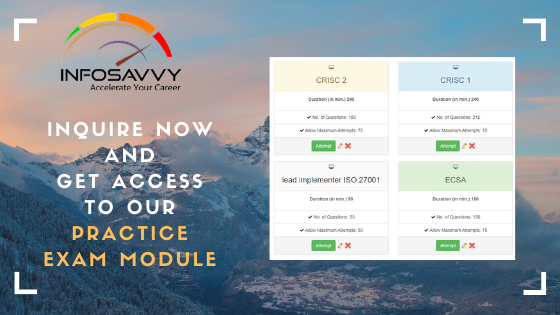

Depending on its source, volatile data falls into the following two types:

1. System Information

System information is information about a system that can act as evidence in a criminal case or security incident. It includes the current configuration and running state of the suspicious computer. Volatile system information includes the system profile (configuration details), login activity, current system date and time, command history, current system uptime, running processes, open files, startup files, clipboard data, logged-on users, and DLLs or shared libraries. System information also includes critical data stored in the slack space of the hard disk drive.

2. Network Information

Network information is the network-related information stored on the suspicious system and on connected network devices. Volatile network information includes open connections and ports, routing information and configuration, the ARP cache, shared files, services accessed, and so on.

3. Order of Volatility

Investigators should always remember that not all data has the same level of volatility, and during live acquisition they should collect the most volatile data first. The order of volatility for a typical computer system is as follows:

- Registers, cache: Information in the processor registers or cache exists for a matter of nanoseconds. It changes constantly and is the most volatile data.

- Routing table, process table, kernel statistics, and memory: The routing table, ARP cache, and kernel statistics reside in the computer's ordinary memory. They are a bit less volatile than the information in the registers, with a life span of about ten nanoseconds.

- Temporary file systems: Temporary file systems tend to persist on the computer longer than routing tables, the ARP cache, and similar data. They are eventually overwritten or changed, sometimes within seconds or minutes.

- Disk or other storage media: Anything stored on a disk stays for a while. However, things can sometimes go wrong and erase or overwrite that data, so disk data is also volatile, with a lifespan of some minutes.

- Remote logging and monitoring data related to the target system: Data passing through a firewall generates logs on a router or switch, and the system might store these logs somewhere else. The problem is that these logs can overwrite themselves, sometimes an hour, a day, or a week later. Even so, they are generally less volatile than a hard drive.

- Physical configuration, network topology: Physical configuration and network topology are less volatile and have a longer life span than some other logs.

- Archival media: A DVD-ROM, CD-ROM, or tape holds the least volatile data, because the digital information on such media does not change on its own unless the media is physically damaged.
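The order above can be encoded as a simple ranking so that a collection plan always starts with the most volatile sources. The Python sketch below is illustrative only: the source keys (such as `routing_process_kernel_memory`) are hypothetical names invented here, and in practice registers and cache are generally not captured directly by standard live-response tools, so real plans usually begin with memory.

```python
# Hypothetical source names; ranks follow the order of volatility above
# (1 = most volatile, so it is collected first).
VOLATILITY_RANK = {
    "registers_cache": 1,
    "routing_process_kernel_memory": 2,
    "temporary_file_systems": 3,
    "disk": 4,
    "remote_logs": 5,
    "physical_config_topology": 6,
    "archival_media": 7,
}


def collection_order(sources: list[str]) -> list[str]:
    """Sort the requested sources from most to least volatile.

    An unknown source name raises KeyError, so a typo in the plan
    fails loudly instead of being silently placed at the end.
    """
    return sorted(sources, key=lambda source: VOLATILITY_RANK[source])


plan = collection_order(["disk", "archival_media", "routing_process_kernel_memory"])
print(plan)  # ['routing_process_kernel_memory', 'disk', 'archival_media']
```

Keeping the ranking in one table means the plan is reordered consistently every time, rather than depending on the order in which an investigator happened to list the sources.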

4. Common Mistakes in Volatile Data Collection

Investigators should collect volatile data carefully, because any mistake can result in permanent data loss.

5. Volatile Data Collection Methodology

Volatile data collection plays a major role in crime scene investigation. To ensure that no critical evidence is lost during collection, investigators should follow a proper methodology and document their approach so that every activity is performed responsibly.

The volatile data collection methodology proceeds step by step as follows:

Step 1: Incident Response Preparation

It is not practically possible to anticipate or eliminate every type of security incident or threat. However, responders can prepare in advance so that they react successfully to an incident and are able to collect all kinds of volatile data.

The following should be ready before an incident occurs:

– A first responder toolkit (responsive disk)

– An incident response team (IRT) or designated first responder

– Forensic-related policies that allow forensic data collection

Step 2: Incident Documentation

Store logs and profiles in an organized, readable format. For example, use naming conventions for forensic tool output, record the time stamps of logged activities, and include the identity of the forensic investigator. Document all the information the security incident requires, and maintain a logbook that records every action taken during the forensic collection. The first responder toolkit logbook also helps in choosing the best tools for the investigation.
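A naming convention and logbook format like the ones described above can be sketched in Python. The format below (case ID, tool, investigator, UTC timestamp) and the sample values `CASE-042` and `jdoe` are hypothetical choices for illustration, not a standard; adapt them to the organization's own documentation policy.

```python
import datetime
import re


def to_utc(when: datetime.datetime) -> datetime.datetime:
    """Convert an aware datetime to UTC; reject naive ones to avoid ambiguity."""
    if when.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return when.astimezone(datetime.timezone.utc)


def output_filename(case_id: str, tool: str, investigator: str,
                    when: datetime.datetime) -> str:
    """Build a name like CASE-042_netstat_jdoe_20240115T093000Z.txt (hypothetical format)."""
    stamp = to_utc(when).strftime("%Y%m%dT%H%M%SZ")
    # Replace anything other than letters, digits, dots and hyphens with "_".
    parts = [re.sub(r"[^A-Za-z0-9.-]", "_", p) for p in (case_id, tool, investigator)]
    return "_".join(parts + [stamp]) + ".txt"


def logbook_entry(investigator: str, action: str,
                  when: datetime.datetime) -> str:
    """Return one tab-separated logbook line: UTC time, investigator, action."""
    return f"{to_utc(when).isoformat()}\t{investigator}\t{action}"


t = datetime.datetime(2024, 1, 15, 9, 30, tzinfo=datetime.timezone.utc)
print(output_filename("CASE-042", "netstat", "jdoe", t))
# CASE-042_netstat_jdoe_20240115T093000Z.txt
```

Using UTC in both the file name and the logbook keeps entries sortable and comparable even when responders work from different time zones.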

Step 3: Policy Verification

Ensure that the planned actions do not violate existing network and computer usage policies or any rights of the registered owner or user. Points to consider for policy verification:

- Read and examine all the policies signed by the user of the suspicious computer.

- Establish the forensic capabilities and limitations of the investigator by determining the legal rights of the user (including a review of federal statutes).

Step 4: Volatile Data Collection Strategy

No two security incidents are alike. Use the first responder toolkit logbook and the questions from the graphic to create a volatile data collection strategy that suits the situation and leaves a negligible footprint on the suspicious system.

Devise a strategy based on considerations such as the type of volatile data, the source of the data, the type of media used, and the type of connection. Make sure there is enough space to copy the complete information.
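As a rough pre-flight check for the last point, the following Python sketch compares estimated output sizes against the free space on the collection destination. The artifact names, the 16 GiB RAM estimate, and the 20% safety margin are assumptions made for illustration; `destination` should point at the collection media, not the suspicious system's disk.

```python
import shutil


def required_bytes(estimates: dict[str, int]) -> int:
    """Total the estimated size in bytes of every planned artifact."""
    return sum(estimates.values())


def has_enough_space(destination: str, needed: int, margin: float = 1.2) -> bool:
    """Return True if `destination` has at least `needed * margin` bytes free.

    The default 20% margin is an assumption meant to absorb estimation error.
    """
    free = shutil.disk_usage(destination).free
    return free >= needed * margin


# Hypothetical size estimates in bytes for the planned outputs.
estimates = {"ram_dump": 16 * 1024**3, "process_list": 1024**2}
print(has_enough_space(".", required_bytes(estimates)))
```

The RAM dump usually dominates the total, so the installed memory size of the suspicious system is a sensible starting estimate.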

Step 5: Volatile Data Collection Setup

- Establish a trusted command shell: Do not open or use the command shell or terminal of the suspicious system. Avoiding it minimizes the footprint on the suspicious system and prevents any malware on the system from being triggered.

- Establish the transmission and storage method: Identify and record how data will be transmitted from the live suspicious computer to the remote data collection system, because the responsive disk will not have enough space to hold the forensic tool output. For example, Netcat and Cryptcat can transmit data remotely over a network.

- Ensure the integrity of forensic tool output: Compute an MD5 hash of the forensic tool output to ensure its integrity and admissibility.
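A minimal Python sketch of the integrity step, assuming the tool output has already been saved as a file on the collection system (for example, after arriving over Netcat). It streams the file through MD5 as the text describes and, as an addition not in the original procedure, also computes SHA-256, because MD5 is known to be vulnerable to collisions.

```python
import hashlib


def hash_file(path: str, chunk_size: int = 1024 * 1024) -> dict[str, str]:
    """Hash a file in chunks and return its MD5 and SHA-256 hex digests.

    Reading in chunks keeps memory use low for large outputs such as RAM dumps.
    """
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            md5.update(chunk)
            sha256.update(chunk)
    return {"md5": md5.hexdigest(), "sha256": sha256.hexdigest()}


def verify_file(path: str, recorded: dict[str, str]) -> bool:
    """Re-hash the file and compare with the digests recorded at collection time."""
    return hash_file(path) == recorded
```

Recording both digests in the logbook next to the file name lets a later examiner rerun `verify_file` and show that the evidence has not changed since collection.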

Step 6: Volatile Data Collection Process

- Record the time, date, and command history of the system

- Start a command history to document all forensic collection activities, and collect all possible volatile information from the system and network

- Do not shut down or restart a system under investigation until all relevant volatile data has been recorded

- Maintain a log of all actions conducted on a running machine

- Photograph the screen of the running system to document its state

- Identify the operating system (OS) running on the suspect machine

- Note the system date, time, and command history, if shown on screen, and record them alongside the current actual time

- Check the system for the use of whole disk or file encryption

- Do not use the administrative utilities on the compromised system during an investigation, and be particularly cautious when running diagnostic utilities

- As each forensic tool or command is executed, generate the date and time to establish an audit trail

- Dump the RAM from the system to a forensically sterile removable storage device

- Collect other volatile OS data and save it to a removable storage device

- Determine the evidence seizure method (for the hardware and for any additional artifacts on the hard drive that may be of evidentiary value)

- Complete a full report documenting all steps and actions taken
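Several of the steps above (identifying the OS, timestamping each command to build an audit trail, and keeping a log of every action) can be combined in a small wrapper. The Python sketch below only illustrates that pattern under stated assumptions: the log structure is hypothetical, and the placeholder command stands in for a trusted toolkit binary. Running an interpreter on a live suspect machine itself leaves a footprint, so in practice this logic would belong to the first responder toolkit rather than the suspect system.

```python
import datetime
import platform
import subprocess
import sys


def utc_now() -> str:
    """Current UTC time in ISO 8601 form, used for every audit record."""
    return datetime.datetime.now(datetime.timezone.utc).isoformat(timespec="microseconds")


def record_os(log: list[dict]) -> None:
    """Append the operating system name and release to the audit log."""
    log.append({"event": "os_identified", "os": platform.system(),
                "release": platform.release(), "time_utc": utc_now()})


def run_with_audit(command: list[str], log: list[dict]) -> str:
    """Run one collection command and log its start and end times in UTC."""
    started = utc_now()
    result = subprocess.run(command, capture_output=True, text=True)
    log.append({
        "event": "command",
        "command": command,
        "start_utc": started,
        "end_utc": utc_now(),
        "return_code": result.returncode,
    })
    return result.stdout


audit_log: list[dict] = []
record_os(audit_log)
# Placeholder command (hypothetical): a real response would run a trusted
# binary from the first responder toolkit, not the system's own interpreter.
output = run_with_audit([sys.executable, "-c", "print('collected')"], audit_log)
```

The resulting `audit_log` can be written out alongside the tool output and hashed in the same way, so the audit trail itself is protected.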

This Blog Article is posted by

Infosavvy, 2nd Floor, Sai Niketan, Chandavalkar Road Opp. Gora Gandhi Hotel, Above Jumbo King, beside Speakwell Institute, Borivali West, Mumbai, Maharashtra 400092

Contact us – www.info-savvy.com